Lecture 1: Course Introduction#

UBC 2024-25

Imports#

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import os

import sys

sys.path.append(os.path.join(os.path.abspath(".."), "code"))

from IPython.display import HTML, display

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import train_test_split

from sklearn.pipeline import make_pipeline

plt.rcParams["font.size"] = 16

pd.set_option("display.max_colwidth", 200)

%matplotlib inline

DATA_DIR = '../data/'

Learning outcomes#

From this lecture, you will be able to

Explain the motivation behind studying machine learning.

Briefly describe supervised learning.

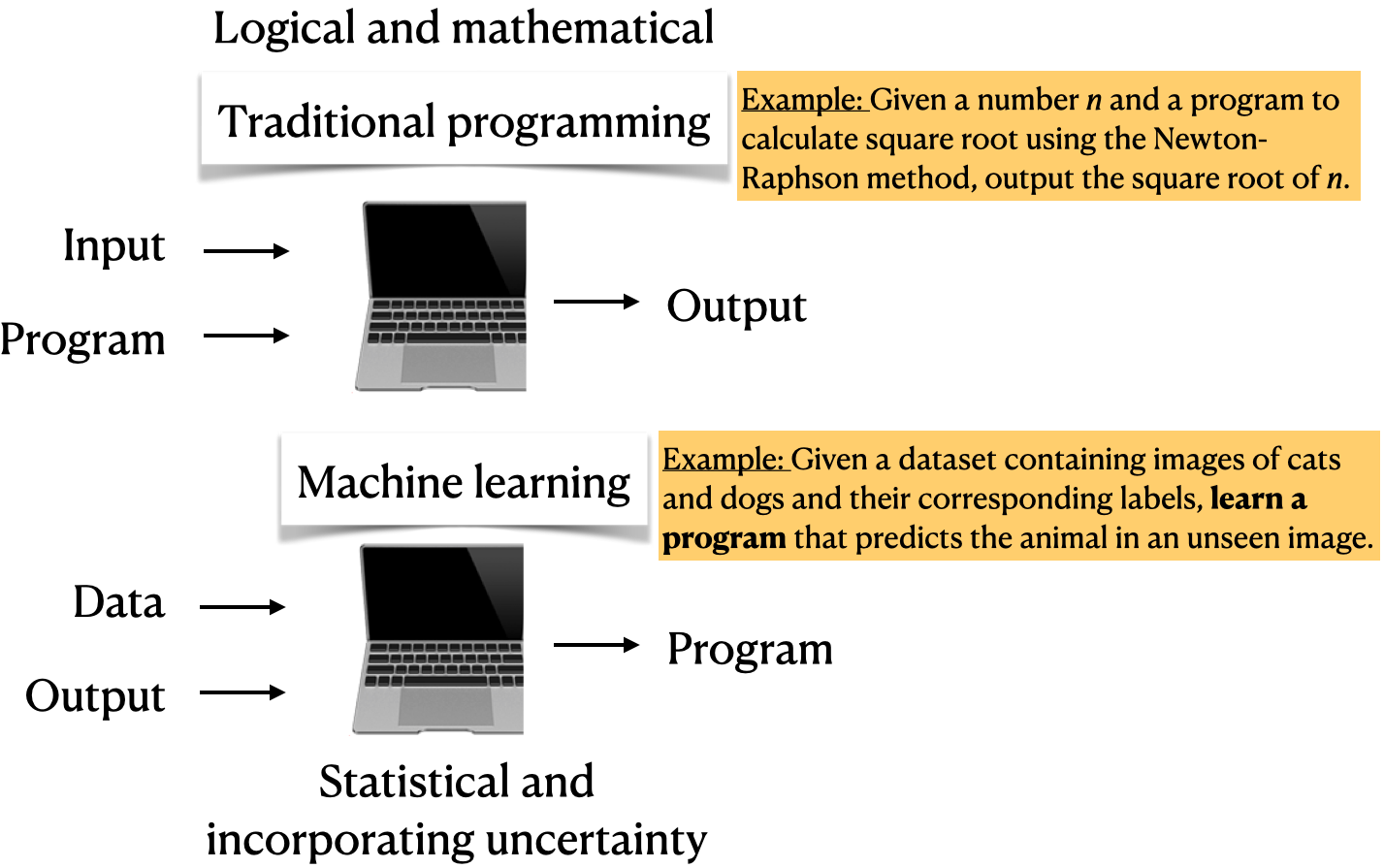

Differentiate between traditional programming and machine learning.

Assess whether a given problem is suitable for a machine learning solution.

Characters in this course?#

CPSC 330 teaching team (instructors and the TAs)

Eva (a fictitious enthusiastic student)

And you all, of course 🙂!

Meet Eva (a fictitious persona)!#

Eva is one of you. She has some experience in Python programming. She knows machine learning as a buzzword. During her recent internship, she developed some interest and curiosity in the field. She wants to learn what it is and how to use it. She is a curious person and usually has a lot of questions!

Why machine learning (ML)? [video]#

See also

Check out the accompanying video on this material.

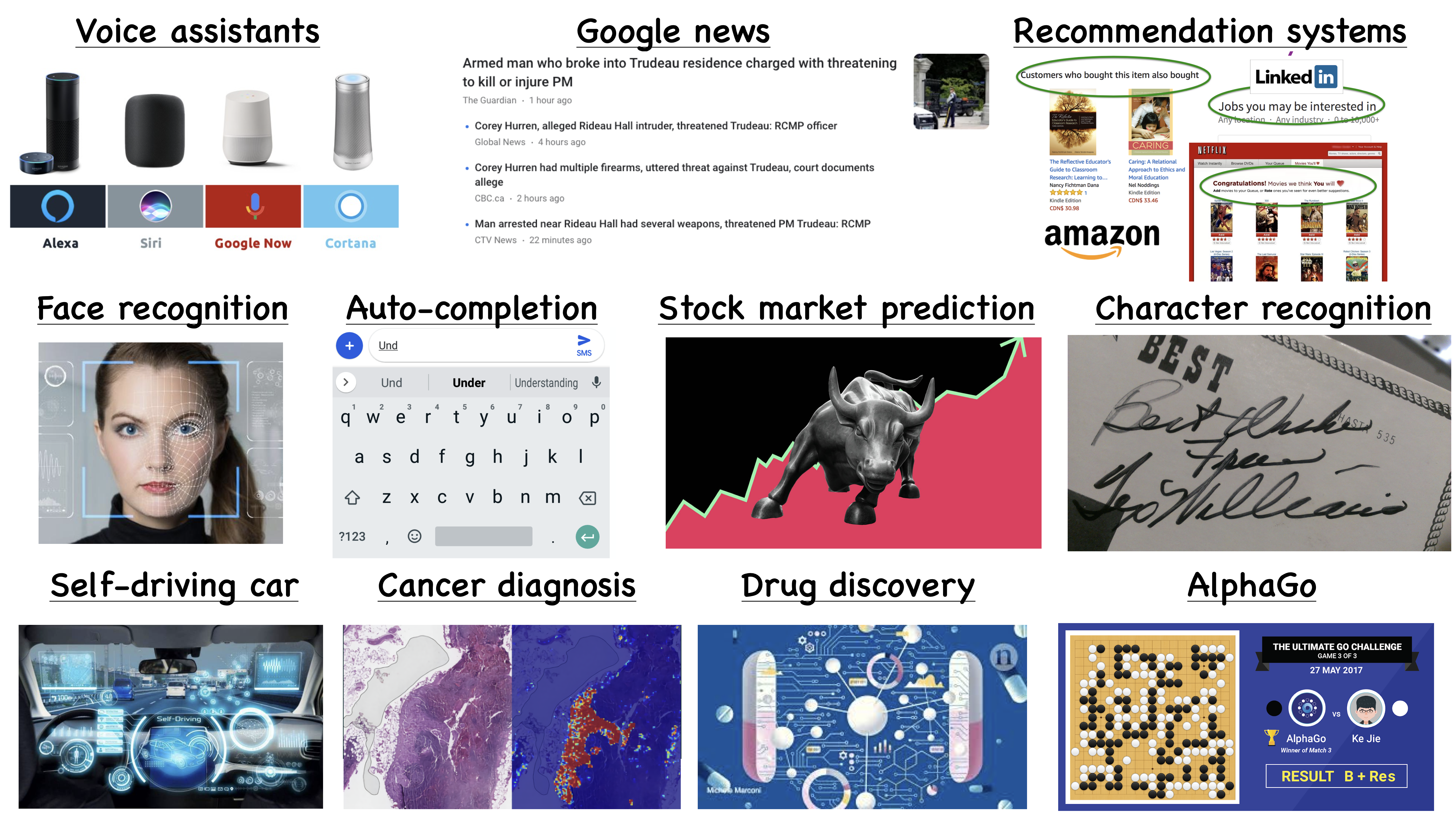

Prevalence of ML#

Let’s look at some examples.

Saving time and scaling products#

Imagine writing a program for spam identification, i.e., whether an email is spam or non-spam.

Traditional programming

Come up with rules using human understanding of spam messages.

Time-consuming, and it is hard to come up with a robust set of rules.

Machine learning

Collect a large amount of spam and non-spam emails and let the machine learning algorithm figure out the rules.

With machine learning, you’re likely to

Save time

Customize and scale products
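The contrast above can be sketched in a few lines. This is a minimal illustration, not the course's implementation: the toy messages, the `rule_based_filter` function, and its word list are all made up for this sketch, and a real spam filter would need far more data.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline

# Hypothetical toy messages, made up for illustration only.
messages = [
    "Win a free prize now, call this number",
    "Free entry in a weekly prize draw, text WIN",
    "Urgent! You have won a cash prize, call now",
    "Are we still meeting for lunch today?",
    "I'll call you when I get home",
    "Can you send me the lecture notes?",
]
labels = ["spam", "spam", "spam", "ham", "ham", "ham"]


def rule_based_filter(message):
    """Traditional programming: a human writes the rules by hand."""
    suspicious_words = {"free", "prize", "won"}  # hypothetical hand-picked list
    words = set(message.lower().replace("!", " ").replace(",", " ").split())
    return "spam" if words & suspicious_words else "ham"


# Machine learning: the algorithm derives its own rules from labelled examples.
learned_filter = make_pipeline(CountVectorizer(), LogisticRegression())
learned_filter.fit(messages, labels)

new_message = "Call now to claim your free prize"
print("Rule-based:", rule_based_filter(new_message))
print("Learned:   ", learned_filter.predict([new_message])[0])
```

To adapt the rule-based filter to new kinds of spam, someone has to edit the word list by hand. The learned filter only needs to be refit on more labelled examples.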

Supervised machine learning#

Types of machine learning#

Here are some typical learning problems.

Supervised learning (Gmail spam filtering)

Training a model from input data and its corresponding targets to predict targets for new examples.

Unsupervised learning (Google News)

Training a model to find patterns in a dataset, typically an unlabeled dataset.

Reinforcement learning (AlphaGo)

A family of algorithms for finding suitable actions to take in a given situation in order to maximize a reward.

Recommendation systems (Amazon item recommendation system)

Predict the “rating” or “preference” a user would give to an item.

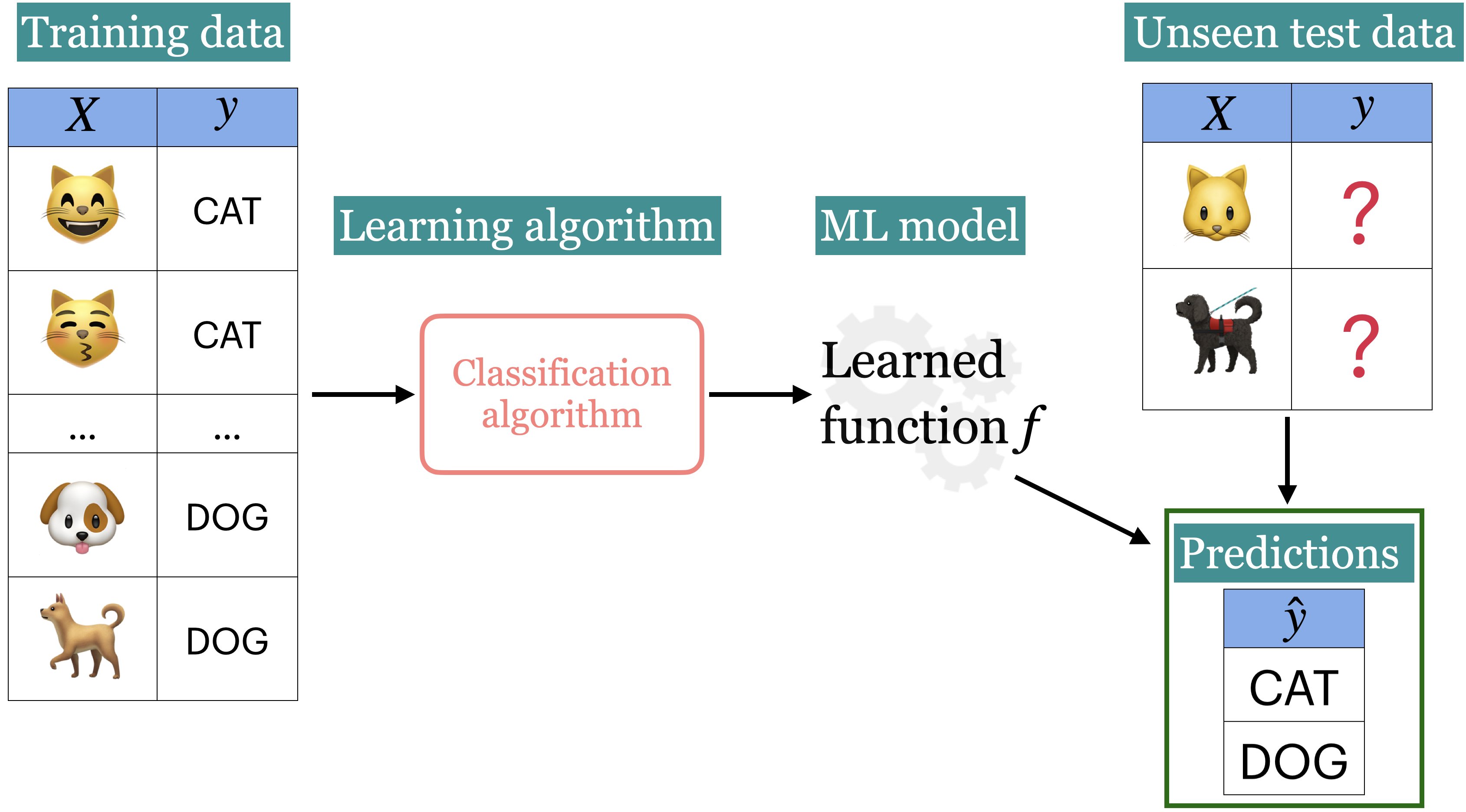

What is supervised machine learning (ML)?#

Training data comprises a set of observations (\(X\)) and their corresponding targets (\(y\)).

We wish to find a model function \(f\) that relates \(X\) to \(y\).

We use the model function to predict targets of new examples.
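As a minimal sketch of these three steps, here is a toy example. The data is made up for illustration, and `DecisionTreeClassifier` is just one convenient choice of model, not the one used in the examples in this lecture.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier

# Hypothetical toy data: each row of X is one observation with two features
# (hours studied, hours slept); y holds the corresponding targets.
X = np.array([[1, 4], [2, 5], [3, 6], [8, 7], [9, 8], [10, 6]])
y = np.array(["fail", "fail", "fail", "pass", "pass", "pass"])

# Fitting the model is the search for a function f that relates X to y.
f = DecisionTreeClassifier(random_state=0)
f.fit(X, y)

# The fitted model predicts targets for new, unseen examples.
X_new = np.array([[2, 7], [9, 5]])
print(f.predict(X_new))
```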

Example: Predict whether a message is spam or not#

Input features \(X\) and target \(y\)#

Note

Do not worry about the code and syntax for now.

Note

Download the SMS Spam Collection Dataset from here.

Training a supervised machine learning model with \(X\) and \(y\)#

| target | sms |

|---|---|

| spam | LookAtMe!: Thanks for your purchase of a video clip from LookAtMe!, you've been charged 35p. Think you can do better? Why not send a video in a MMSto 32323. |

| ham | Aight, I'll hit you up when I get some cash |

| ham | Don no da:)whats you plan? |

| ham | Going to take your babe out ? |

| ham | No need lar. Jus testing e phone card. Dunno network not gd i thk. Me waiting 4 my sis 2 finish bathing so i can bathe. Dun disturb u liao u cleaning ur room. |

X_train, y_train = train_df["sms"], train_df["target"]

X_test, y_test = test_df["sms"], test_df["target"]

clf = make_pipeline(CountVectorizer(max_features=5000), LogisticRegression(max_iter=5000))

clf.fit(X_train, y_train);

Predicting on unseen data using the trained model#

pd.DataFrame(X_test[0:4])

| | sms |

|---|---|

| 3245 | Funny fact Nobody teaches volcanoes 2 erupt, tsunamis 2 arise, hurricanes 2 sway aroundn no 1 teaches hw 2 choose a wife Natural disasters just happens |

| 944 | I sent my scores to sophas and i had to do secondary application for a few schools. I think if you are thinking of applying, do a research on cost also. Contact joke ogunrinde, her school is one m... |

| 1044 | We know someone who you know that fancies you. Call 09058097218 to find out who. POBox 6, LS15HB 150p |

| 2484 | Only if you promise your getting out as SOON as you can. And you'll text me in the morning to let me know you made it in ok. |

Note

Do not worry about the code and syntax for now.

pred_dict = {

"sms": X_test[0:4],

"spam_predictions": clf.predict(X_test[0:4]),

}

pred_df = pd.DataFrame(pred_dict)

pred_df.style.set_properties(**{"text-align": "left"})

| | sms | spam_predictions |

|---|---|---|

| 3245 | Funny fact Nobody teaches volcanoes 2 erupt, tsunamis 2 arise, hurricanes 2 sway aroundn no 1 teaches hw 2 choose a wife Natural disasters just happens | ham |

| 944 | I sent my scores to sophas and i had to do secondary application for a few schools. I think if you are thinking of applying, do a research on cost also. Contact joke ogunrinde, her school is one me the less expensive ones | ham |

| 1044 | We know someone who you know that fancies you. Call 09058097218 to find out who. POBox 6, LS15HB 150p | spam |

| 2484 | Only if you promise your getting out as SOON as you can. And you'll text me in the morning to let me know you made it in ok. | ham |

We have accurately predicted labels for the unseen text messages above!

(Supervised) machine learning: popular definition#

A field of study that gives computers the ability to learn without being explicitly programmed.

-- Arthur Samuel (1959)

ML is a different way to think about problem solving.

Examples#

Let’s look at some concrete examples of supervised machine learning.

Note

Do not worry about the code at this point. Just focus on the input and output in each example.

Example 1: Predicting whether a patient has a liver disease or not#

Input data#

Suppose we are interested in predicting whether a patient has the disease or not. We are given some tabular data with inputs and outputs of liver patients, as shown below. The data contains a number of input features and a special column called “Target” which is the output we are interested in predicting.

Note

Download the data from here.

| Age | Total_Bilirubin | Direct_Bilirubin | Alkaline_Phosphotase | Alamine_Aminotransferase | Aspartate_Aminotransferase | Total_Protiens | Albumin | Albumin_and_Globulin_Ratio | Target |

|---|---|---|---|---|---|---|---|---|---|

| 40 | 14.5 | 6.4 | 358 | 50 | 75 | 5.7 | 2.1 | 0.50 | Disease |

| 33 | 0.7 | 0.2 | 256 | 21 | 30 | 8.5 | 3.9 | 0.80 | Disease |

| 24 | 0.7 | 0.2 | 188 | 11 | 10 | 5.5 | 2.3 | 0.71 | No Disease |

| 60 | 0.7 | 0.2 | 171 | 31 | 26 | 7.0 | 3.5 | 1.00 | No Disease |

| 18 | 0.8 | 0.2 | 199 | 34 | 31 | 6.5 | 3.5 | 1.16 | No Disease |

Building a supervised machine learning model#

Let’s train a supervised machine learning model with the input and output above.

from lightgbm.sklearn import LGBMClassifier

X_train = train_df.drop(columns=["Target"])

y_train = train_df["Target"]

X_test = test_df.drop(columns=["Target"])

y_test = test_df["Target"]

model = LGBMClassifier(random_state=123, verbose=-1)

model.fit(X_train, y_train)


LGBMClassifier(random_state=123, verbose=-1)

Model predictions on unseen data#

Given the features of the new patients below, we’ll use this model to predict whether these patients have liver disease or not.

HTML(X_test.reset_index(drop=True).to_html(index=False))

| Age | Total_Bilirubin | Direct_Bilirubin | Alkaline_Phosphotase | Alamine_Aminotransferase | Aspartate_Aminotransferase | Total_Protiens | Albumin | Albumin_and_Globulin_Ratio |

|---|---|---|---|---|---|---|---|---|

| 19 | 1.4 | 0.8 | 178 | 13 | 26 | 8.0 | 4.6 | 1.30 |

| 12 | 1.0 | 0.2 | 719 | 157 | 108 | 7.2 | 3.7 | 1.00 |

| 60 | 5.7 | 2.8 | 214 | 412 | 850 | 7.3 | 3.2 | 0.78 |

| 42 | 0.5 | 0.1 | 162 | 155 | 108 | 8.1 | 4.0 | 0.90 |

pred_df = pd.DataFrame({"Predicted_target": model.predict(X_test).tolist()})

df_concat = pd.concat([pred_df, X_test.reset_index(drop=True)], axis=1)

HTML(df_concat.to_html(index=False))

| Predicted_target | Age | Total_Bilirubin | Direct_Bilirubin | Alkaline_Phosphotase | Alamine_Aminotransferase | Aspartate_Aminotransferase | Total_Protiens | Albumin | Albumin_and_Globulin_Ratio |

|---|---|---|---|---|---|---|---|---|---|

| No Disease | 19 | 1.4 | 0.8 | 178 | 13 | 26 | 8.0 | 4.6 | 1.30 |

| Disease | 12 | 1.0 | 0.2 | 719 | 157 | 108 | 7.2 | 3.7 | 1.00 |

| Disease | 60 | 5.7 | 2.8 | 214 | 412 | 850 | 7.3 | 3.2 | 0.78 |

| Disease | 42 | 0.5 | 0.1 | 162 | 155 | 108 | 8.1 | 4.0 | 0.90 |

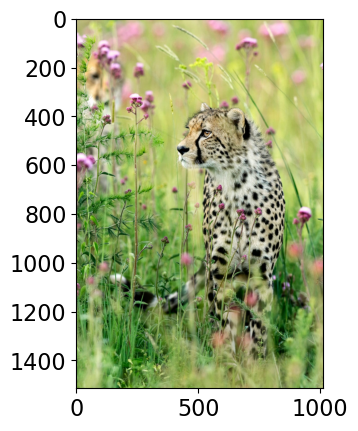

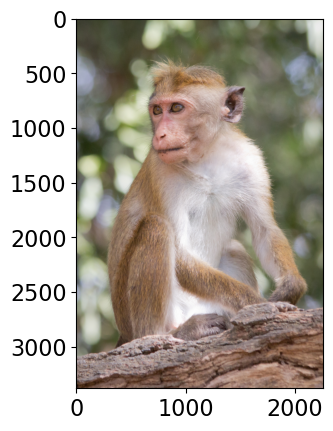

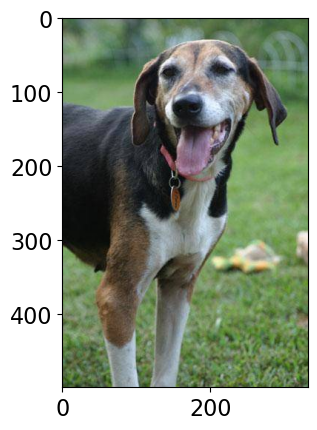

Example 2: Predicting the label of a given image#

Suppose you want to predict the label of a given image using supervised machine learning. We are using a pre-trained model here to predict labels of new unseen images.

Note

Assuming that you have successfully created the cpsc330 conda environment on your computer, you’ll have to install torchvision in that environment to run the following code. If you are unable to install torchvision on your laptop, please don’t worry; it is not crucial at this point.

conda activate cpsc330

conda install -c pytorch torchvision

import img_classify

from PIL import Image

import glob

import matplotlib.pyplot as plt

# Predict topn labels and their associated probabilities for unseen images

images = glob.glob(DATA_DIR + "test_images/*.*")

class_labels_file = DATA_DIR + 'imagenet_classes.txt'

for img_path in images:

    img = Image.open(img_path).convert('RGB')

    img.load()

    plt.imshow(img)

    plt.show();

    df = img_classify.classify_image(img_path, class_labels_file)

    print(df.to_string(index=False))

    print("--------------------------------------------------------------")

Class Probability score

tiger cat 0.636

tabby, tabby cat 0.174

Pembroke, Pembroke Welsh corgi 0.081

lynx, catamount 0.011

--------------------------------------------------------------

Class Probability score

cheetah, chetah, Acinonyx jubatus 0.994

leopard, Panthera pardus 0.005

jaguar, panther, Panthera onca, Felis onca 0.001

snow leopard, ounce, Panthera uncia 0.000

--------------------------------------------------------------

Class Probability score

macaque 0.885

patas, hussar monkey, Erythrocebus patas 0.062

proboscis monkey, Nasalis larvatus 0.015

titi, titi monkey 0.010

--------------------------------------------------------------

Class Probability score

Walker hound, Walker foxhound 0.582

English foxhound 0.144

beagle 0.068

EntleBucher 0.059

--------------------------------------------------------------
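The internals of the course helper `img_classify.classify_image` are not shown here. As a rough sketch of how probability scores like the ones above are commonly produced, an image classifier outputs one raw score per class, a softmax turns the scores into probabilities, and the top few are reported. The class names, raw scores, and the `softmax` and `top_n` functions below are made up for illustration.

```python
import numpy as np

# Hypothetical raw scores (logits) for five made-up classes; a real ImageNet
# model would output 1000 of these per image.
class_names = np.array(["tabby cat", "tiger cat", "corgi", "lynx", "beagle"])
logits = np.array([2.0, 3.3, 1.2, -0.8, -1.5])


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    shifted = scores - scores.max()  # subtract the max for numerical stability
    exp_scores = np.exp(shifted)
    return exp_scores / exp_scores.sum()


def top_n(probabilities, names, n=4):
    """Return the n most probable (class, probability) pairs, best first."""
    order = np.argsort(probabilities)[::-1][:n]
    return [(names[i], round(float(probabilities[i]), 3)) for i in order]


probs = softmax(logits)
for name, prob in top_n(probs, class_names):
    print(f"{name:<12} {prob:.3f}")
```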

Example 3: Predicting housing prices#

Suppose we want to predict housing prices given a number of attributes associated with houses.

Note

Download the data from here.

| target | bedrooms | bathrooms | sqft_living | sqft_lot | floors | waterfront | view | condition | grade | sqft_above | sqft_basement | yr_built | yr_renovated | zipcode | lat | long | sqft_living15 | sqft_lot15 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 509000.0 | 2 | 1.50 | 1930 | 3521 | 2.0 | 0 | 0 | 3 | 8 | 1930 | 0 | 1989 | 0 | 98007 | 47.6092 | -122.146 | 1840 | 3576 |

| 675000.0 | 5 | 2.75 | 2570 | 12906 | 2.0 | 0 | 0 | 3 | 8 | 2570 | 0 | 1987 | 0 | 98075 | 47.5814 | -122.050 | 2580 | 12927 |

| 420000.0 | 3 | 1.00 | 1150 | 5120 | 1.0 | 0 | 0 | 4 | 6 | 800 | 350 | 1946 | 0 | 98116 | 47.5588 | -122.392 | 1220 | 5120 |

| 680000.0 | 8 | 2.75 | 2530 | 4800 | 2.0 | 0 | 0 | 4 | 7 | 1390 | 1140 | 1901 | 0 | 98112 | 47.6241 | -122.305 | 1540 | 4800 |

| 357823.0 | 3 | 1.50 | 1240 | 9196 | 1.0 | 0 | 0 | 3 | 8 | 1240 | 0 | 1968 | 0 | 98072 | 47.7562 | -122.094 | 1690 | 10800 |

# Build a regression model

from lightgbm.sklearn import LGBMRegressor

X_train, y_train = train_df.drop(columns= ["target"]), train_df["target"]

X_test, y_test = test_df.drop(columns= ["target"]), test_df["target"]

model = LGBMRegressor()

#model = XGBRegressor()

model.fit(X_train, y_train);

# Predict on unseen examples using the built model

pred_df = pd.DataFrame(

# {"Predicted target": model.predict(X_test[0:4]).tolist(), "Actual price": y_test[0:4].tolist()}

{"Predicted_target": model.predict(X_test[0:4]).tolist()}

)

df_concat = pd.concat([pred_df, X_test[0:4].reset_index(drop=True)], axis=1)

HTML(df_concat.to_html(index=False))

| Predicted_target | bedrooms | bathrooms | sqft_living | sqft_lot | floors | waterfront | view | condition | grade | sqft_above | sqft_basement | yr_built | yr_renovated | zipcode | lat | long | sqft_living15 | sqft_lot15 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 345831.740542 | 4 | 2.25 | 2130 | 8078 | 1.0 | 0 | 0 | 4 | 7 | 1380 | 750 | 1977 | 0 | 98055 | 47.4482 | -122.209 | 2300 | 8112 |

| 601042.018745 | 3 | 2.50 | 2210 | 7620 | 2.0 | 0 | 0 | 3 | 8 | 2210 | 0 | 1994 | 0 | 98052 | 47.6938 | -122.130 | 1920 | 7440 |

| 311310.186024 | 4 | 1.50 | 1800 | 9576 | 1.0 | 0 | 0 | 4 | 7 | 1800 | 0 | 1977 | 0 | 98045 | 47.4664 | -121.747 | 1370 | 9576 |

| 597555.592401 | 3 | 2.50 | 1580 | 1321 | 2.0 | 0 | 2 | 3 | 8 | 1080 | 500 | 2014 | 0 | 98107 | 47.6688 | -122.402 | 1530 | 1357 |
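Unlike the two earlier examples, the target here is a number rather than a category, which makes this a regression problem. The following sketch shows the same fit-and-predict pattern on synthetic data. The data-generating formula is invented for illustration, and scikit-learn's `LinearRegression` stands in for `LGBMRegressor` so that the sketch needs no extra packages.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Synthetic, made-up data: price depends on living area and bedrooms plus noise.
rng = np.random.default_rng(123)
sqft_living = rng.uniform(800, 3500, size=200)
bedrooms = rng.integers(1, 6, size=200)
price = 150 * sqft_living + 10_000 * bedrooms + rng.normal(0, 20_000, size=200)

X = np.column_stack([sqft_living, bedrooms])
y = price

reg = LinearRegression()
reg.fit(X, y)

# Predictions are continuous numbers, not class labels.
new_houses = np.array([[1200, 2], [3000, 4]])
predicted_prices = reg.predict(new_houses)
print(predicted_prices.round(0))
```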

To summarize, supervised machine learning can be used on a variety of problems and different kinds of data.

🤔 Eva’s questions#

At this point, Eva has many questions.

How exactly are we “learning” whether a message is spam or ham?

What do you mean by “learn without being explicitly programmed”? The code has to be somewhere …

Are we expected to get correct predictions for all possible messages? How does it predict the label for a message it has not seen before?

What if the model mislabels an unseen example? For instance, what if the model incorrectly predicts a non-spam message as spam? What would be the consequences?

How do we measure the success or failure of spam identification?

If you want to use this model in the wild, how do you know how reliable it is?

Would it be useful to know how confident the model is about the predictions rather than just a yes or a no?

It’s great to think about these questions right now. But Eva has to be patient. By the end of this course, you’ll know the answers to many of these questions!

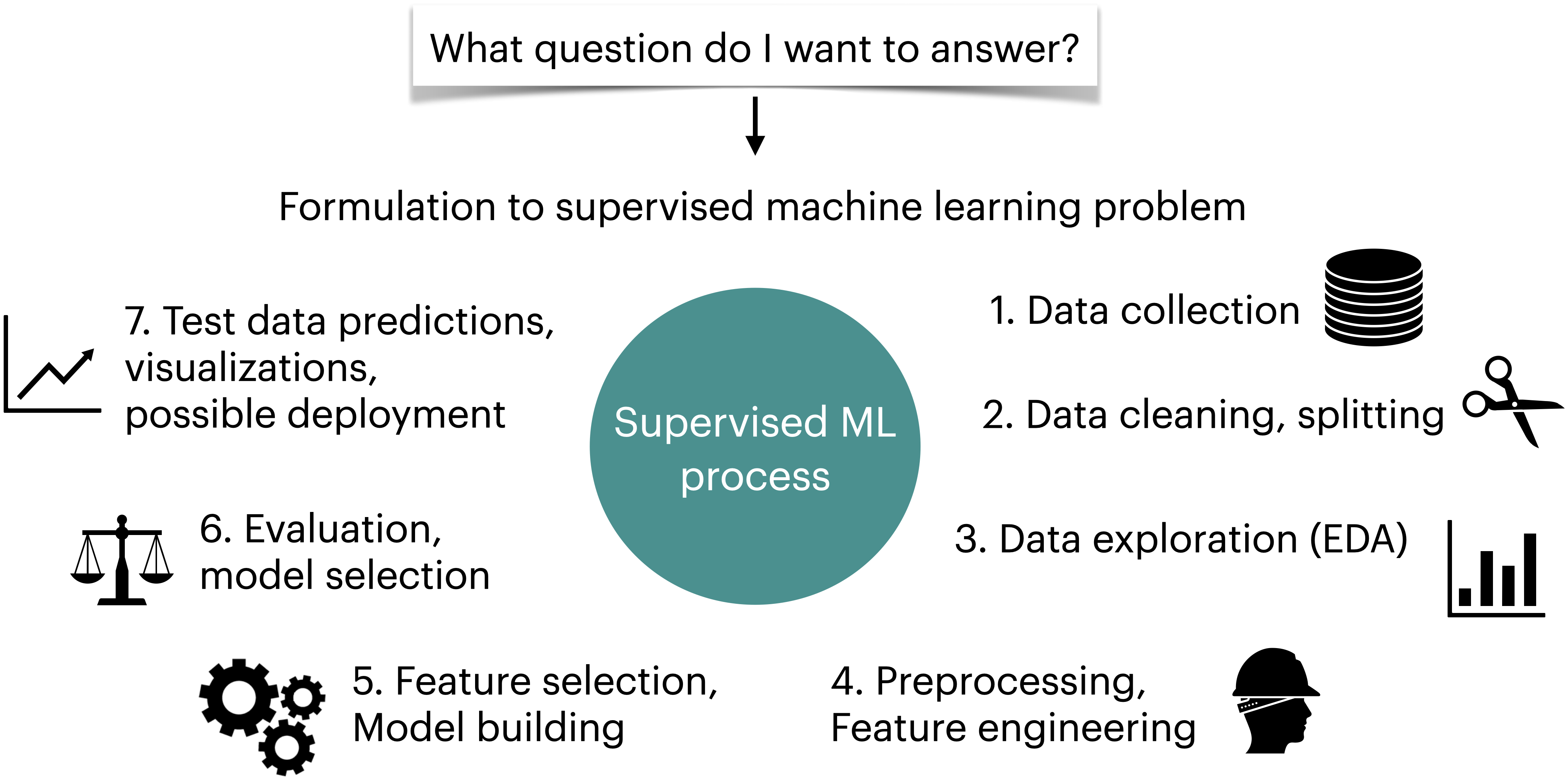

Machine learning workflow#

Supervised machine learning is quite flexible; it can be used on a variety of problems and different kinds of data. Here is a typical workflow of a supervised machine learning system.

We will build machine learning pipelines in this course, focusing on some of the steps above.
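As a rough sketch of what such a pipeline can look like in code, the steps are: get labelled data, hold out a test set, fit a pipeline on the training portion, evaluate on the held-out portion, and predict on new examples. The dataset here is scikit-learn's built-in breast cancer data, chosen only because it needs no download. The preprocessing step and the model are illustrative choices, not the course's prescribed ones.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# 1. Get labelled data: features X and targets y.
X, y = load_breast_cancer(return_X_y=True, as_frame=True)

# 2. Hold out part of the data so the model can be checked on unseen examples.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=123
)

# 3. Build a pipeline (preprocessing + model) and fit it on the training set only.
pipe = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
pipe.fit(X_train, y_train)

# 4. Evaluate on the held-out test set.
test_accuracy = pipe.score(X_test, y_test)
print(f"Test accuracy: {test_accuracy:.3f}")

# 5. Use the fitted pipeline to predict targets for new examples.
print(pipe.predict(X_test.iloc[:3]))
```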

❓❓ Questions for you#

iClicker cloud join link: https://join.iclicker.com/VYFJ

Select all of the following statements which are True (iClicker)#

(A) Predicting spam is an example of machine learning.

(B) Predicting housing prices is not an example of machine learning.

(C) For problems such as spelling correction, translation, face recognition, and spam identification, if you are a domain expert, it’s usually faster and more scalable to come up with a robust set of rules manually than to build a machine learning model.

(D) If you are asked to write a program to find all prime numbers up to a limit, it is better to implement one of the algorithms for doing so rather than using machine learning.

(E) Google News is likely using machine learning to organize news.

Summary#

Machine learning is increasingly being applied across various fields.

In supervised learning, we are given a set of observations (\(X\)) and their corresponding targets (\(y\)) and we wish to find a model function \(f\) that relates \(X\) to \(y\).

Machine learning is a different paradigm for problem solving. Very often it reduces the time you spend programming and helps you customize and scale your products.

Before applying machine learning to a problem, it’s always advisable to assess whether a given problem is suitable for a machine learning solution or not.